Hi. Rafael here, finally with another devlog about the game, as opposed to grants and other business stuff that no one cares about.

Guillaume and I have finally hired our first teammates (we’re 6 people now!), figured out their contracts, payroll and benefits, and started transitioning back into game development. Still, between having to manage our little startup now, having to go back and forth with the NSF and actively contributing to Tablecraft’s development, we always find a way to prioritize other things over writing devlogs. Even my attempt at streamlining the process by replacing written devlogs with podcast episodes, where all we had to do was talk about stuff, ended up getting neglected. RIP. 💀

My brain’s Department of Prioritization and Department of Self Justification released a joint brain-wide statement a while ago, notifying all other brain departments that there would be no new Tablecraft devlog posts from me in the foreseeable future. The announcement read “there is simply no time to write devlogs, we have a business to run and a game to make”.

Things would’ve worked out just fine, had it not been for John Ruiz, who, perhaps unknowingly, completely overthrew the status quo when he recently started writing a devlog about the work that he’s doing.

“PWAAAAAAAAHHHHHHH”, was the sound of the sirens going off in my brain’s Department of Logic and Reason, after immediately realizing that for John’s devlog to make any sense, I would first have to contextualize things with a devlog of my own. Brain chaos ensued.

After a week or so of brain politics, a deal was finally struck: the Department of Logic and Reason is allowed to take control of my body for just long enough to pump out this devlog, and in return, it is forbidden from notifying my brain’s Department of Guilt and Responsibility the next time my brain’s Department of Self Justification decides it’s okay for me to go down a YouTube rabbit hole that lasts until 3 AM.

Now, with all the parties involved having finally consented to it, welcome to another Tablecraft devlog.

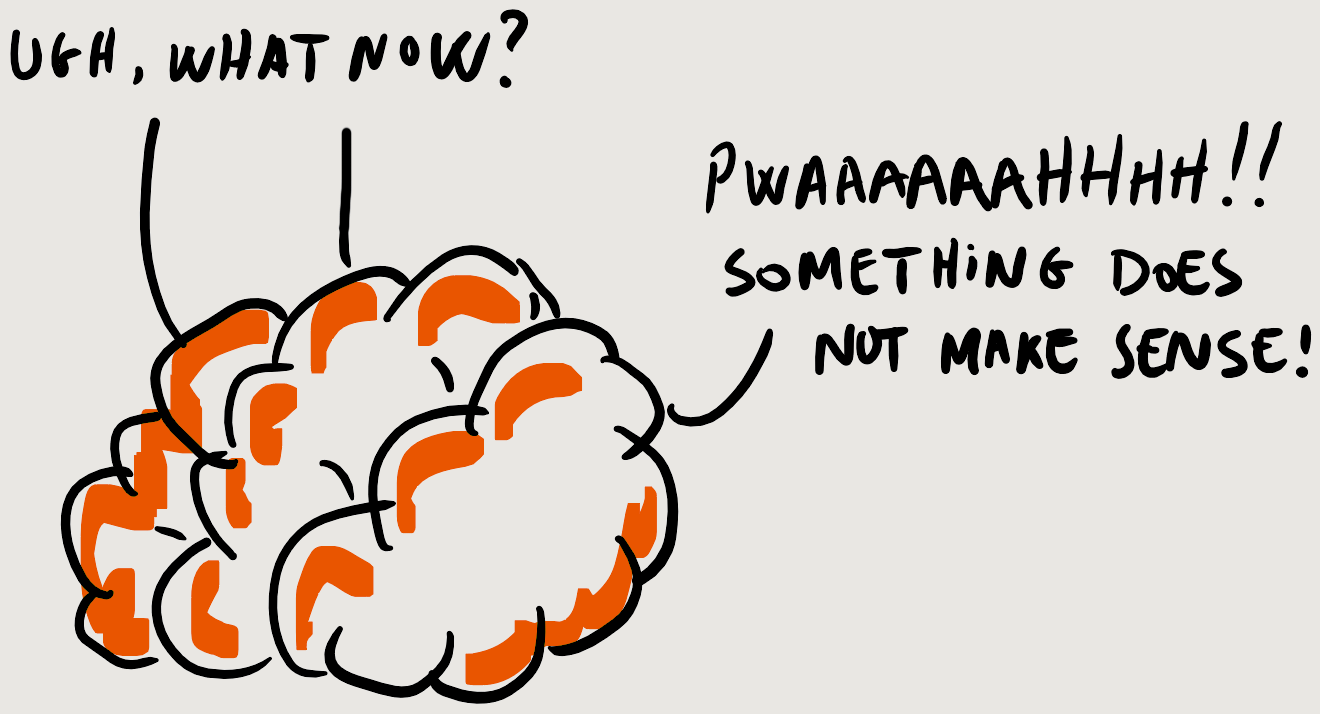

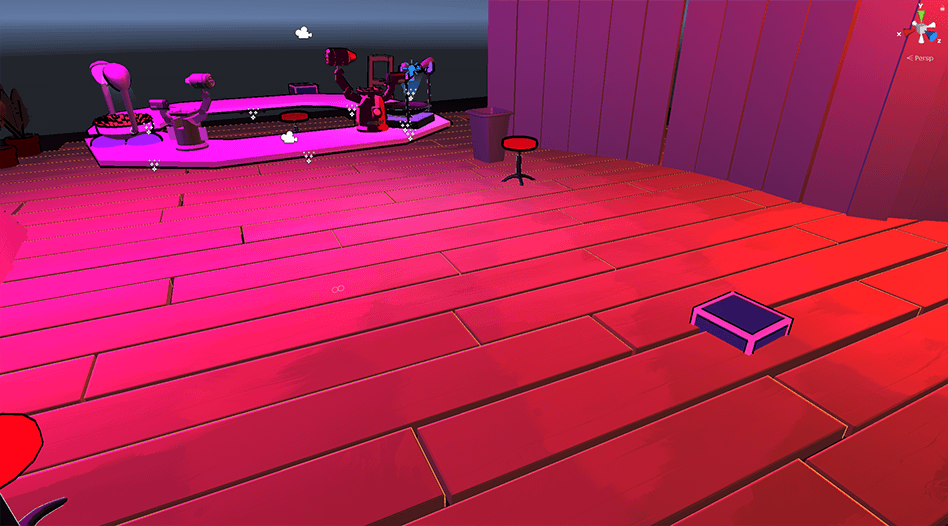

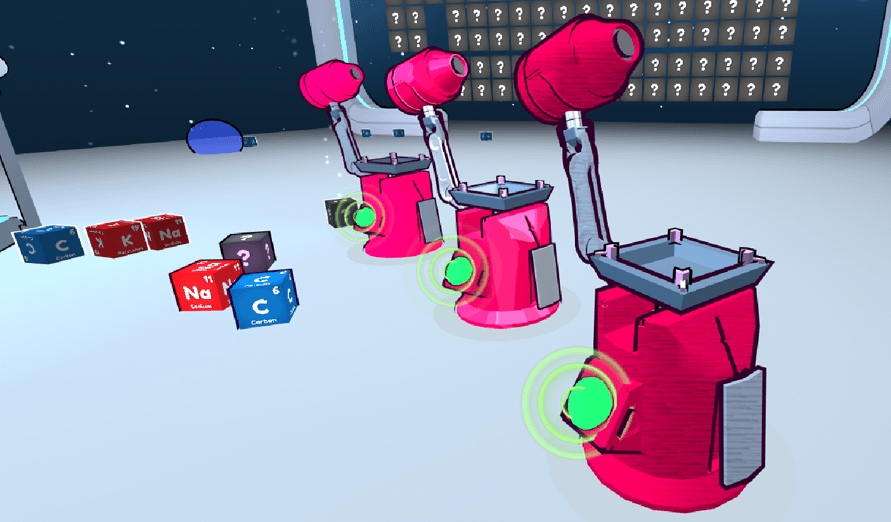

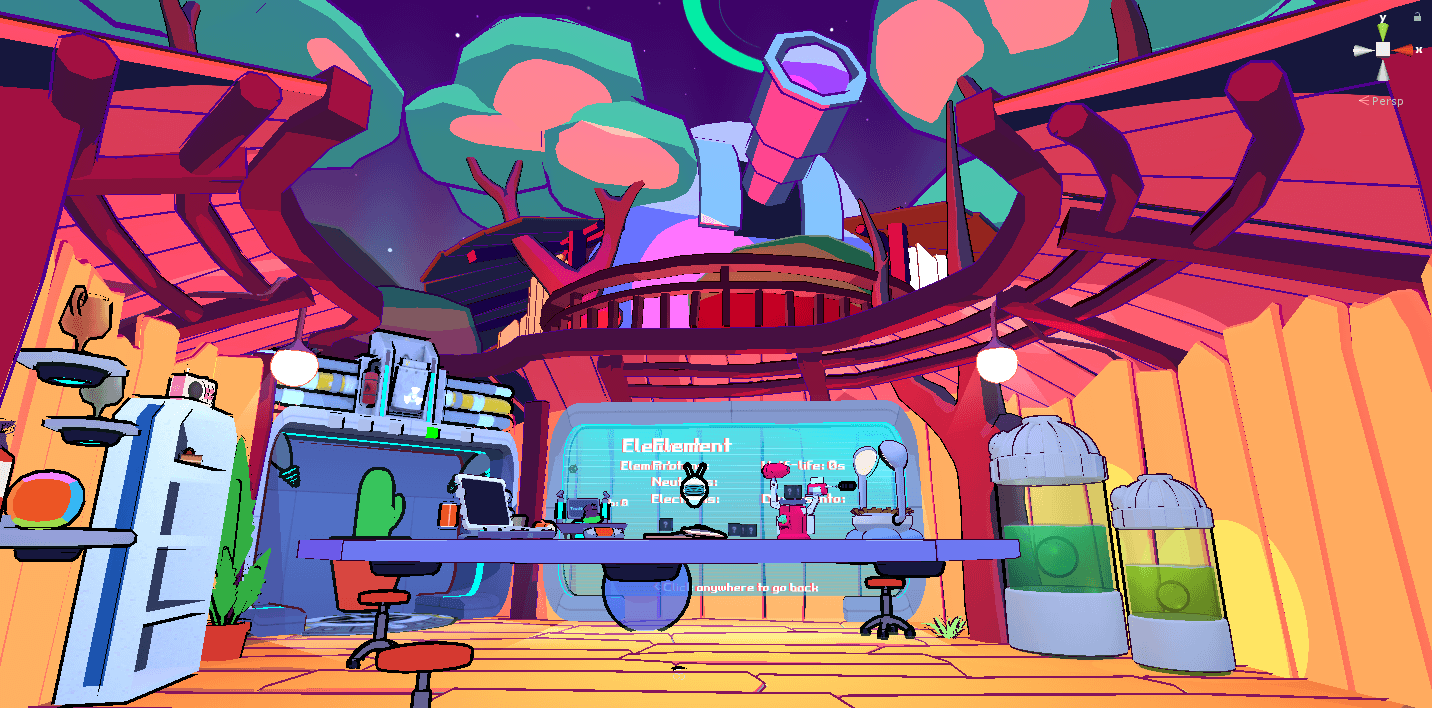

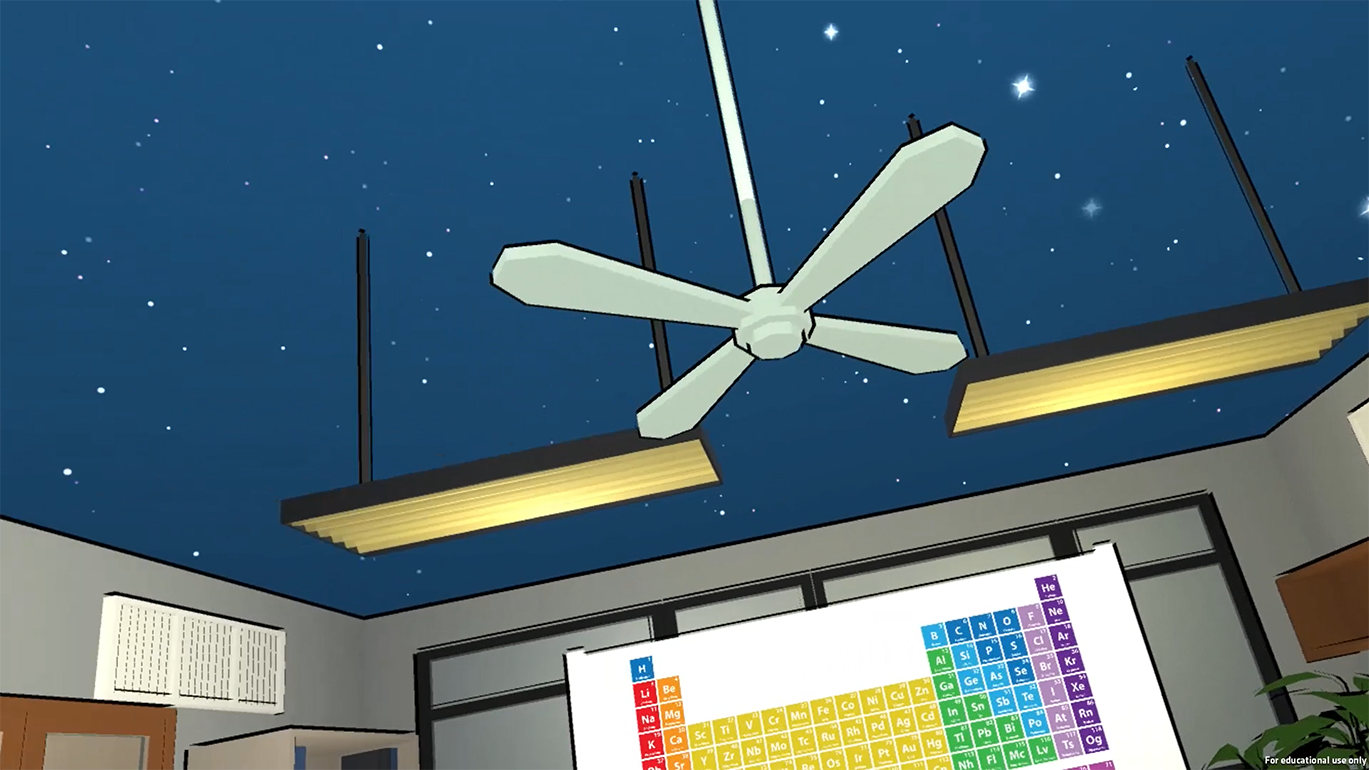

In the first devlog posted on this website (eons ago), I described how Tablecraft came to be. In that same post, I mentioned the pursuit of our tone, and what I wanted the game’s environment to feel like. At the time, I didn’t know what the environment should look like (and to a certain extent, I still don’t), but I already knew it shouldn’t feel like an enclosed space. So I did the natural thing and ripped out the ceiling of the Lab:

Much later, when John and I were randomly discussing Tablecraft (before John joined the team) and I explained to him why I had ripped out the Lab’s ceiling, he asked “what about a treehouse, then?”.

I immediately liked the idea. It could explain the giant hole in the ceiling. Plus, treehouses are awesome. If it were socially accepted for adults to live in treehouses, and I owned a tree, and I came to terms with the idea of living off the grid with no access to convenient sanitation for an indefinite period of time, I’d build a treehouse and live in it. However, because none of those things are true, and I have no knowledge of carpentry, I decided to build the treehouse in VR.

My first treehouse prototype wasn’t pretty, but it kinda got the job done. When I got in VR, it felt a lot more welcoming than the old Lab’s cold gray walls. Most people seemed to agree, except perhaps for Reddit’s r/chemistry, who apparently loved the sterile Lab environment when I first shared it there, but then never really seemed to care anymore after I replaced the wall textures with wood. Though come to think of it, it could’ve also been the pooping Blobs that ruined it for them. It’s unclear.

Whatever the case, it was clear to me, and to most non-chemists who tried the game, that the treehouse idea was the way to go. So now it was time to figure out what a proper-looking treehouse lab should look like.

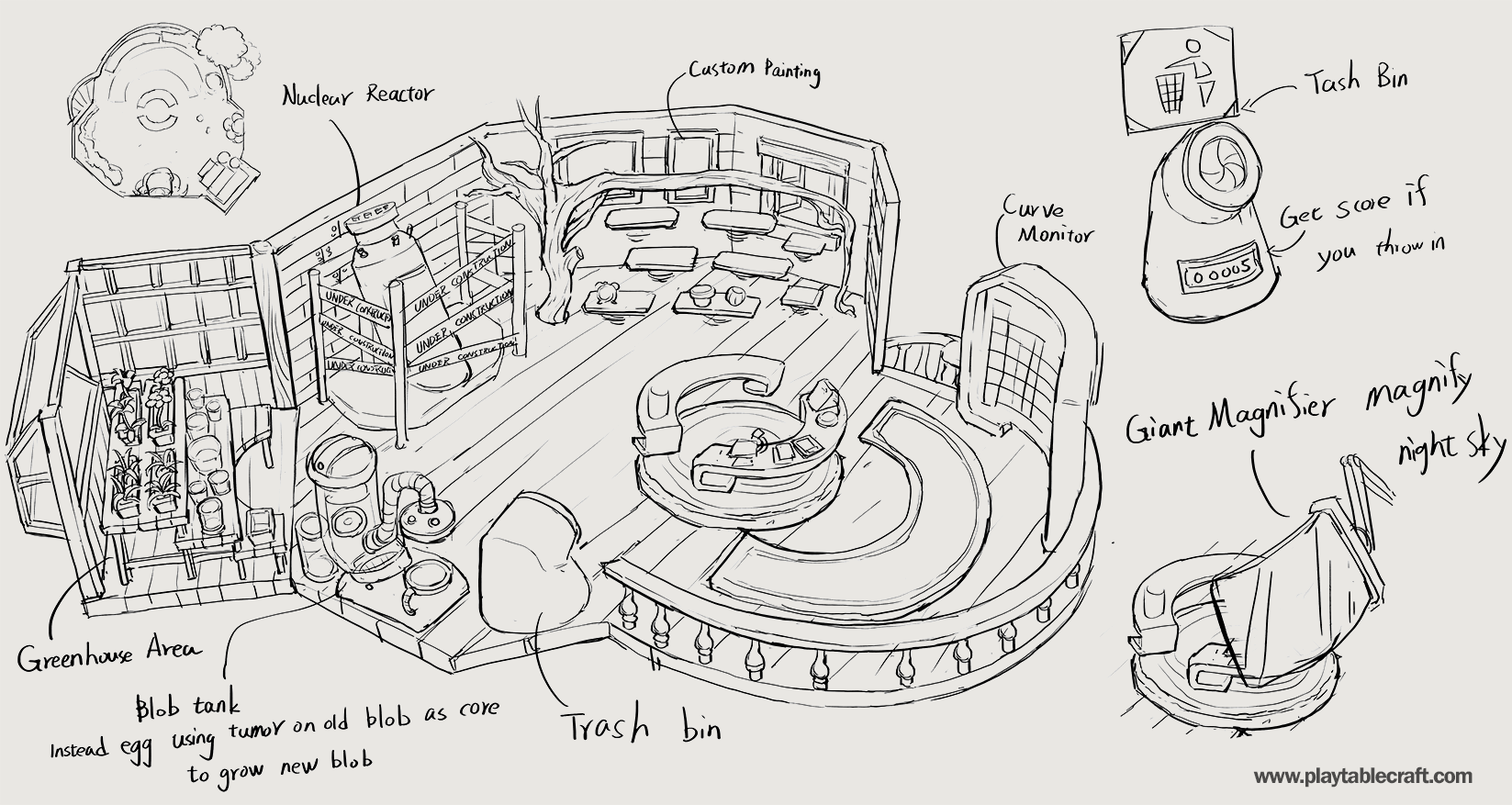

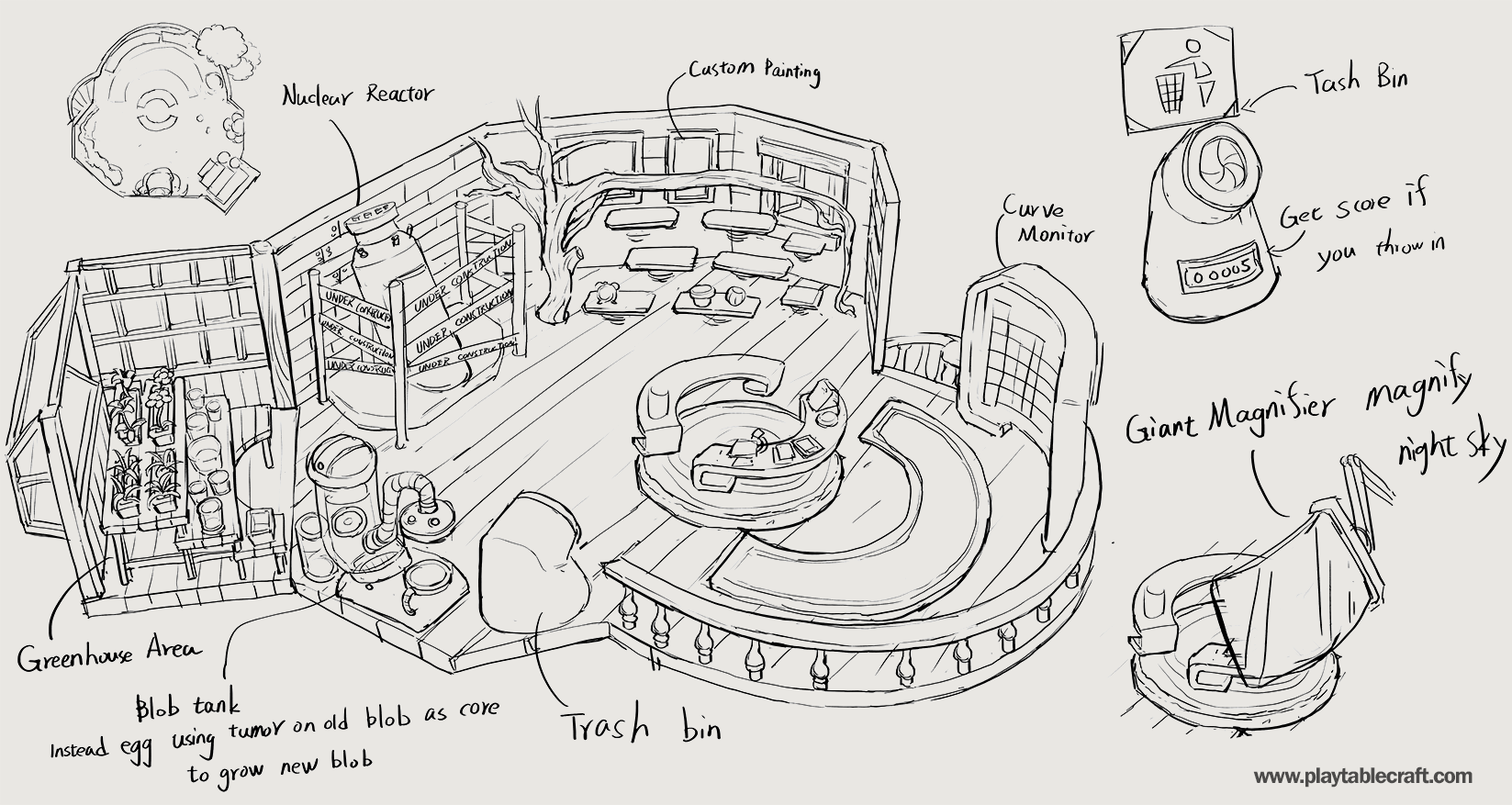

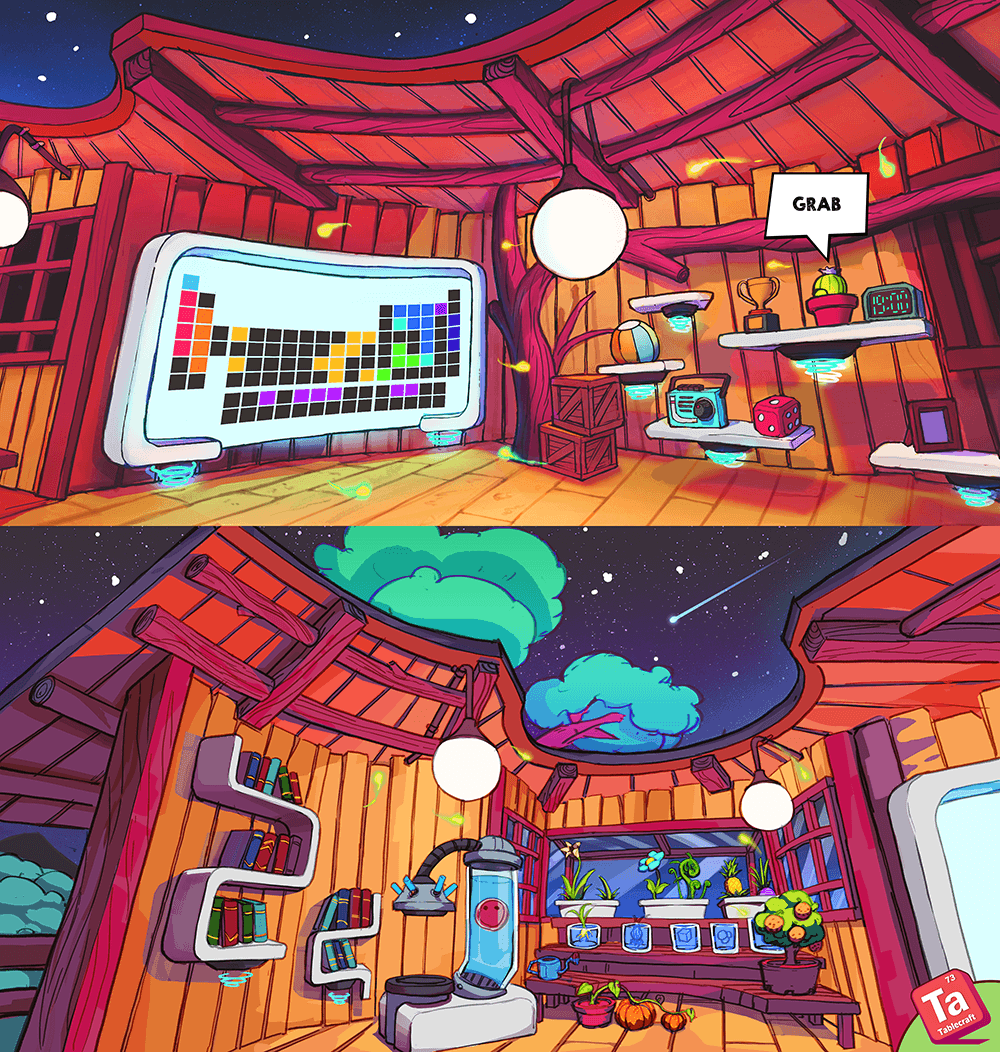

I went online and looked up labs and treehouses, and then I hired a freelance 2D artist (Heng Yi Hsieh) to come up with some sketches based on some guidelines I gave him. This was his first try, which he somehow put together in a couple of hours:

He also provided a diorama:

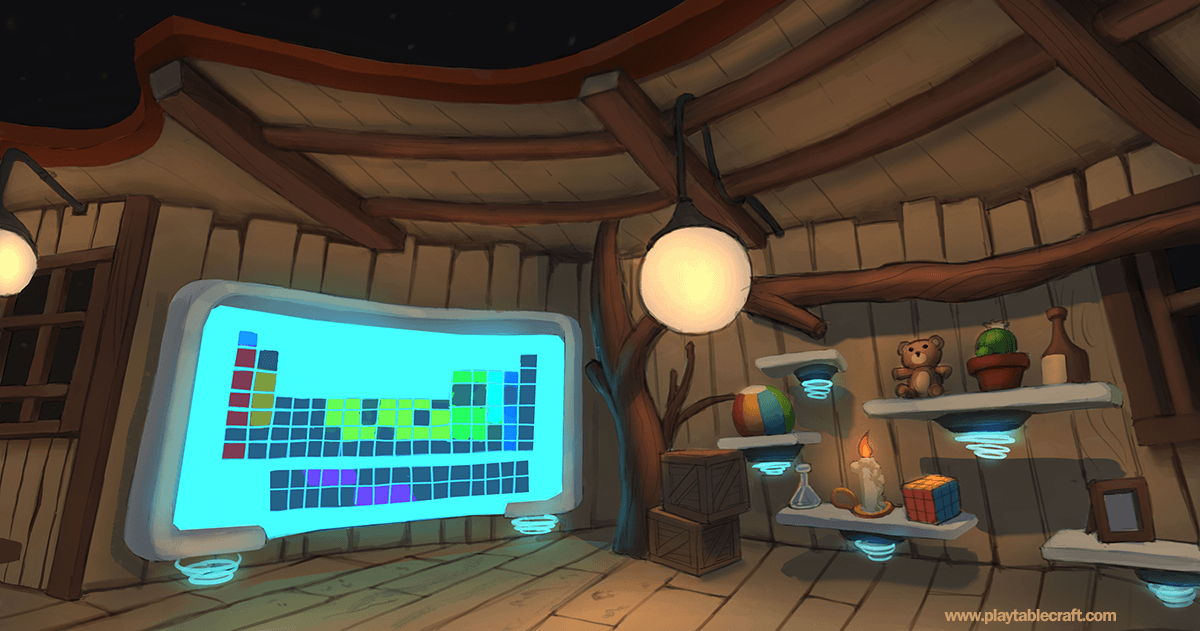

Very impressive. I then asked him to choose a couple of angles from his first person view sketch and to bring them to life with color. I must’ve goofed and failed to properly highlight the style I had in mind though, because he was clearly thinking of a different style. A spooky style:

At this point I had already almost run out of all the money I could possibly spend on this. It didn’t take long. As a two-person company at the time (myself and Guillaume), we only had a laughably small amount of money, which we rarely used for anything other than going to conferences to showcase Tablecraft, purchasing Unity assets, software licenses and freelance work when necessary. So I told Heng Yi to take a break.

I decided to try to paint it myself, immediately taking off on a bit of a weird journey:

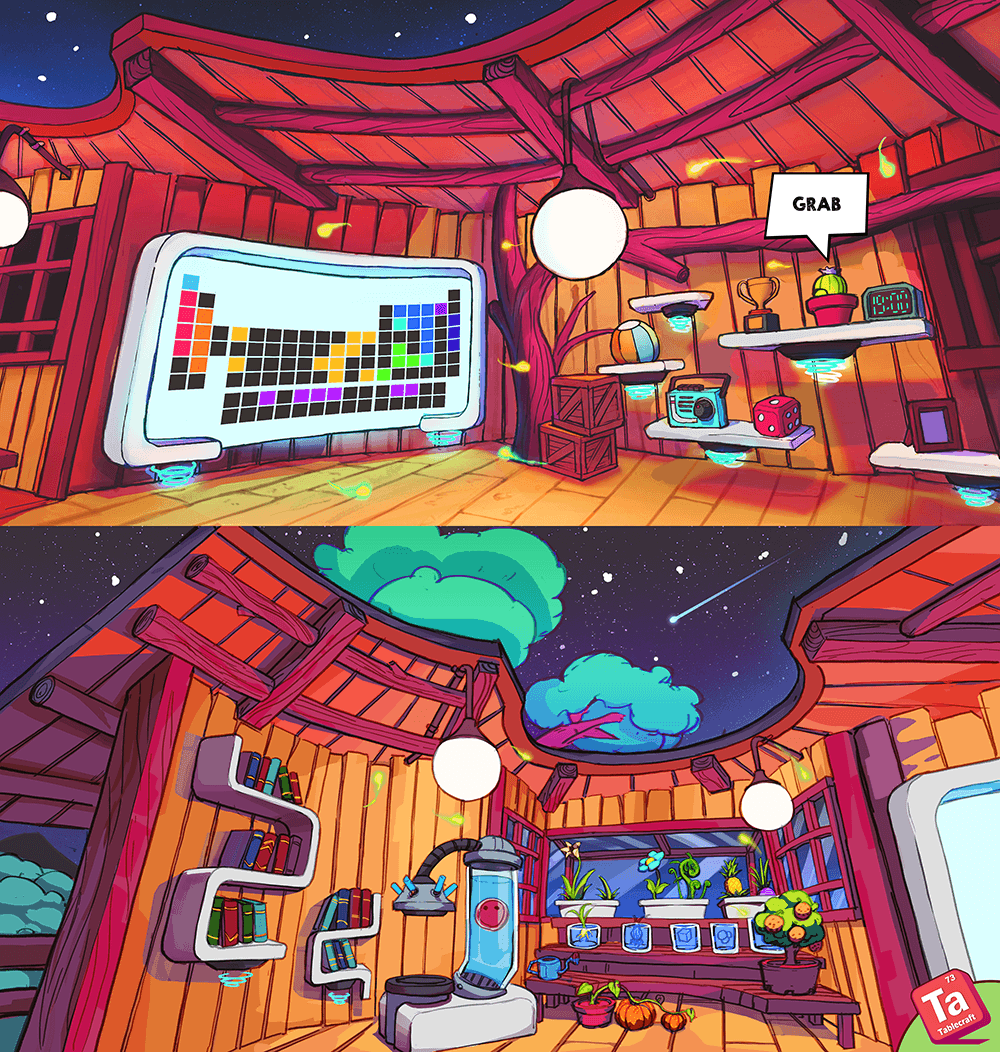

In the end, I settled on this comic-book-inspired look:

I chose the comic-book aesthetic because I figured that if we managed to pull it off in VR, perhaps the game could make players feel like they’re comic-book superheroes, such as Iron Man, for example. That could be cool.

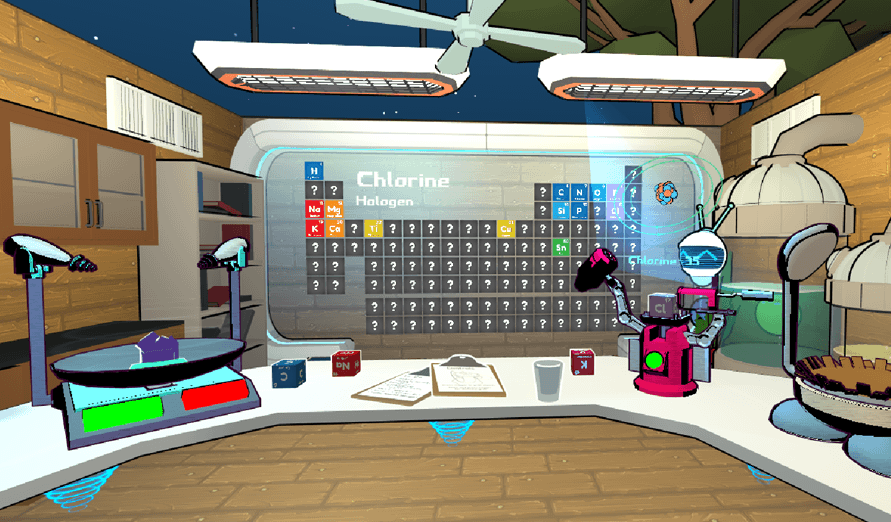

So this is where John Ruiz finally comes in again. John is a 3D artist. I showed him the 2D drawings and asked him if he would like to join our team as a permanent member. He did, and for the months following that, he and I slowly tried to bring the 2D concept to life in 3D, with John doing all the 3D modeling and me trying to figure out the colors and lighting in Unity.

Our first challenge was in figuring out what style of planks we should go with. Should the treehouse planks be straight or wonky?

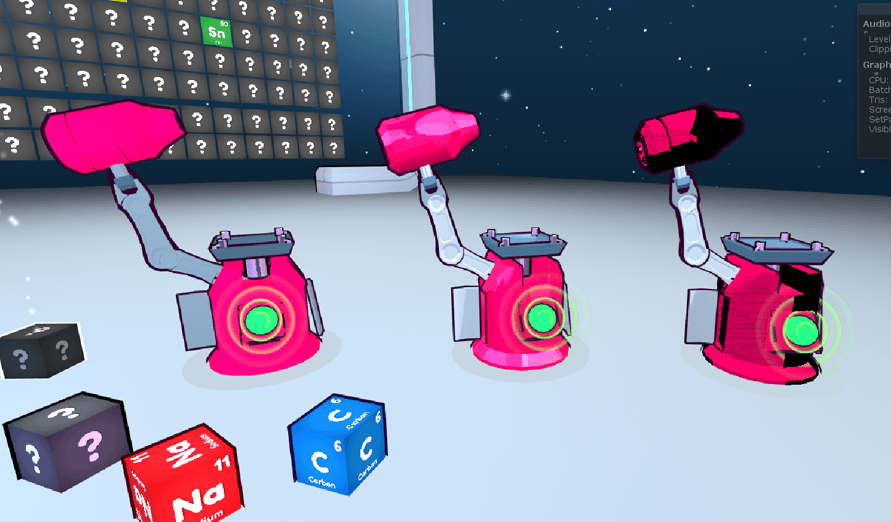

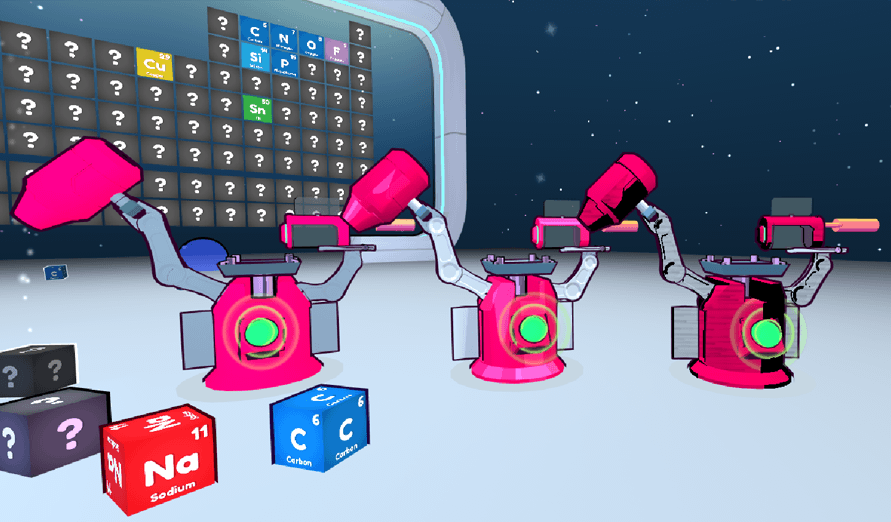

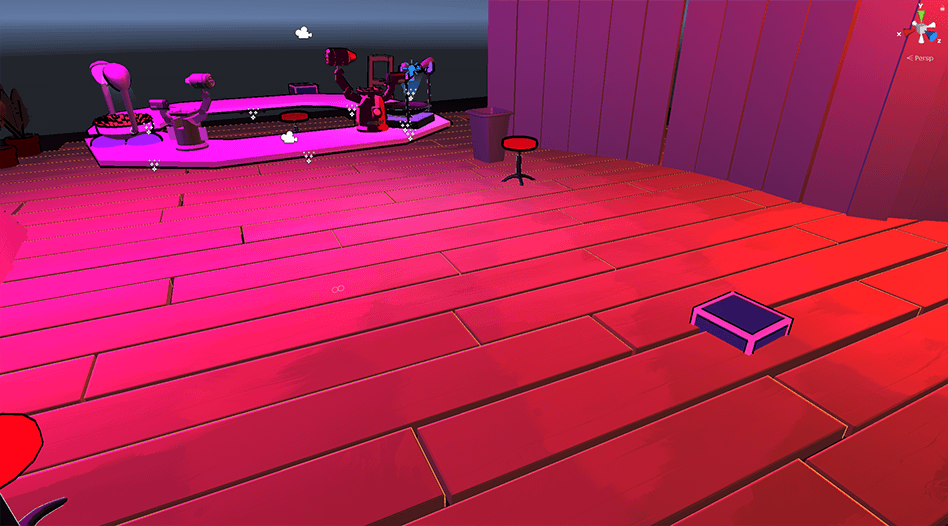

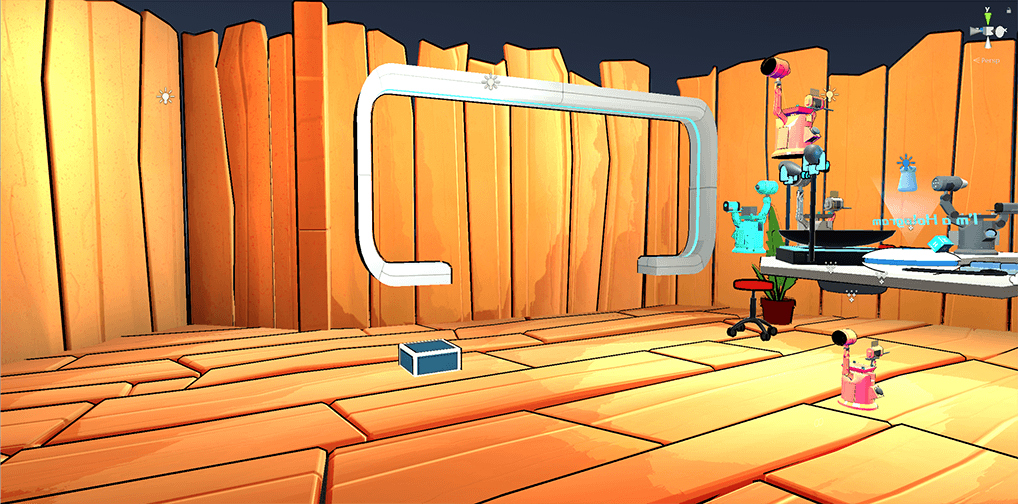

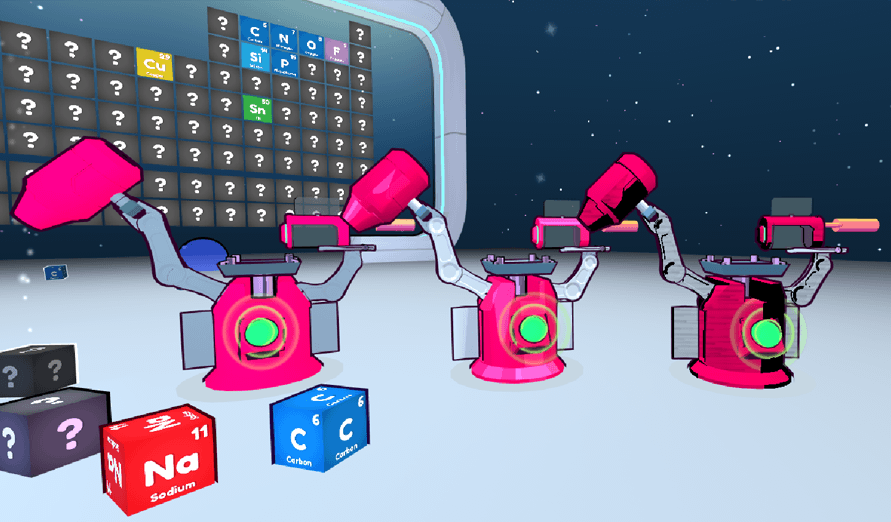

While John worked on that, I did some tests in Unity to see what kind of comic-book aesthetics we could potentially try to pull off in-engine.

I experimented with 4 different styles: completely-flat (shown above and below, to the left), cel-shaded (shown above and below, in the middle), noir-shaded-with-textures (shown above, to the right) and smooth-shaded-with-textures (shown below, to the right).

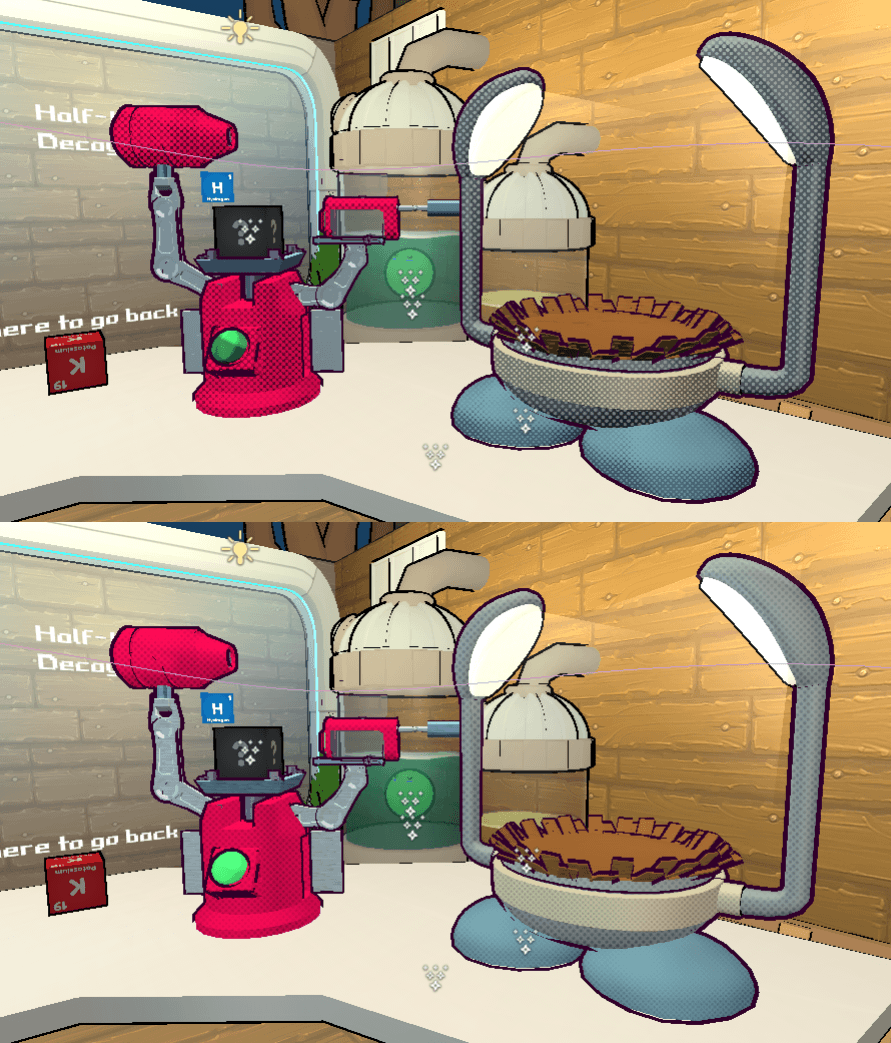

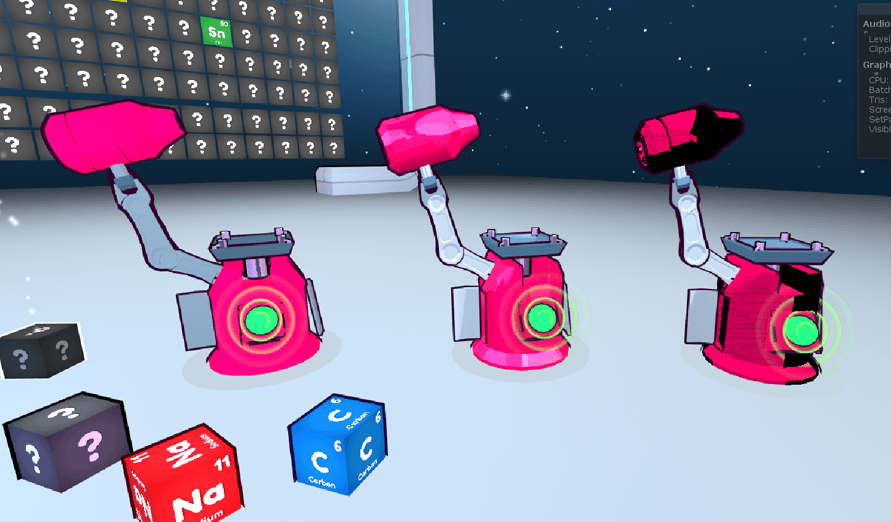

I thought the noir-shaded-with-textures look was really comic-book appropriate, so I tried that on a couple of the machines in-game. This was the result:

If we ignore the blatant clash of artstyles in the screenshot above and simply focus on the machines sitting on the desk, I suppose this could’ve been one of the paths we could’ve taken with our tone. Instead of going for a welcoming, friendly approach where the player feels like a comic-book superhero, we could’ve gone with this noir look instead, and potentially made them feel like comic-book super-villains whose parents got killed in an alley or something. It came off to me as a style that could be appropriate for mature audiences, and that’s not a bad thing.

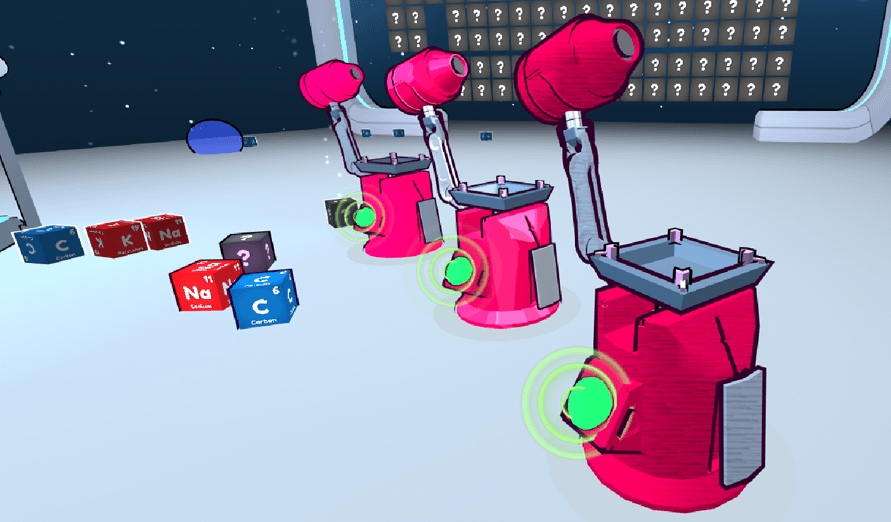

Nevertheless, it felt like too drastic a change in tone at the time, one that would be really hard to pull off successfully, so I tried to tone it down a little by experimenting with the smooth-shaded-with-textures look afterwards:

This one didn’t feel as serious as the noir look, but it also wasn’t clear whether it was successfully contributing to the tone I was looking for. The use of placeholder assets and the clash of artstyles were preventing me from making a proper assessment. On top of that, I ran out of ideas for other styles I could potentially execute on, so unfortunately, for the time being, I had reached a dead-end and had to halt progress on this.

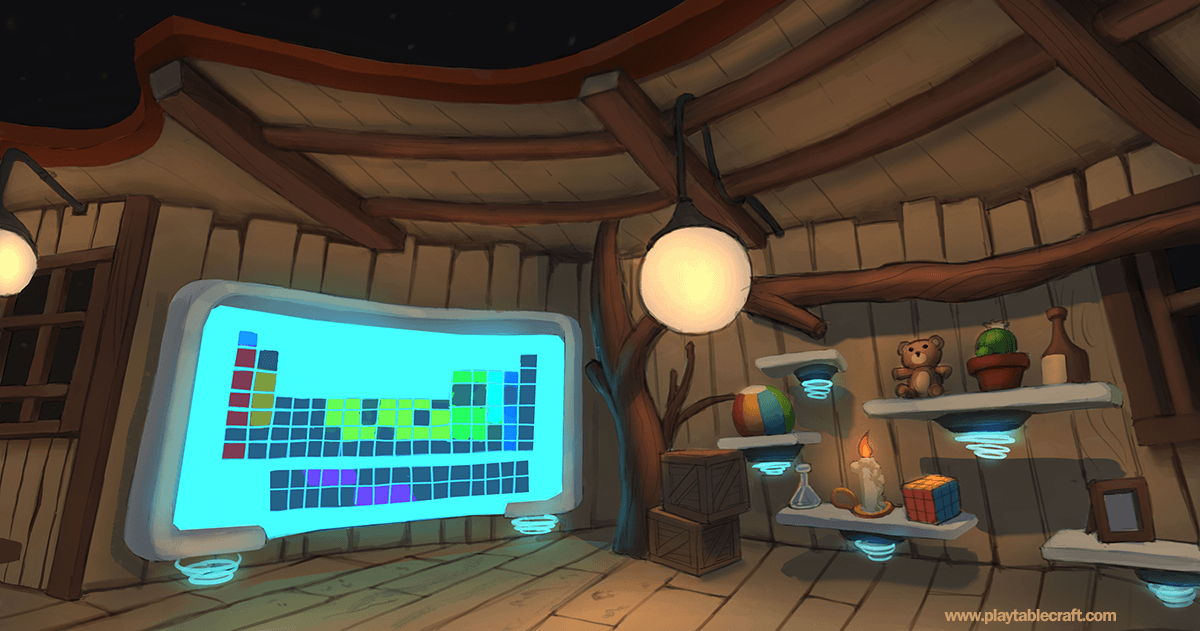

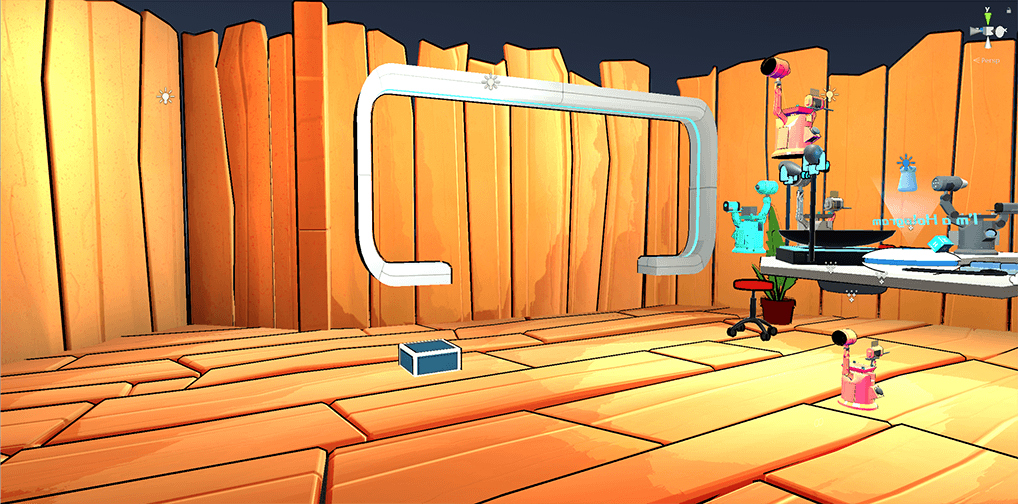

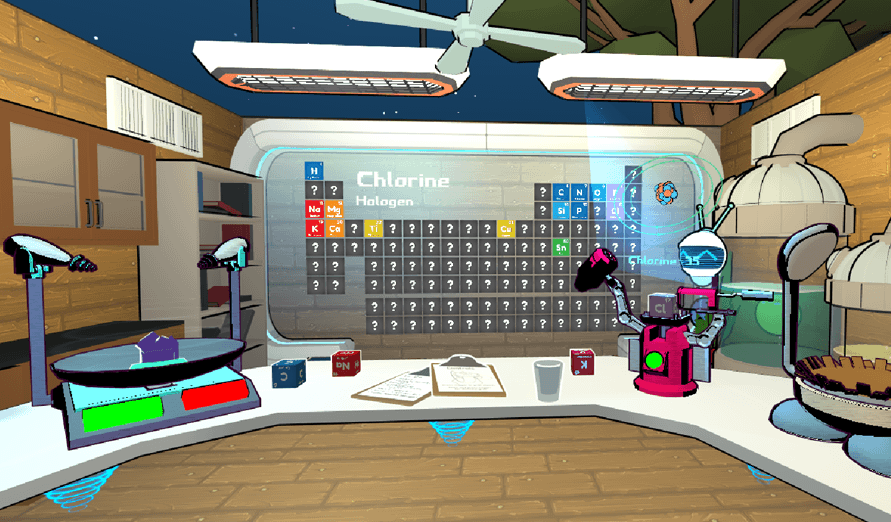

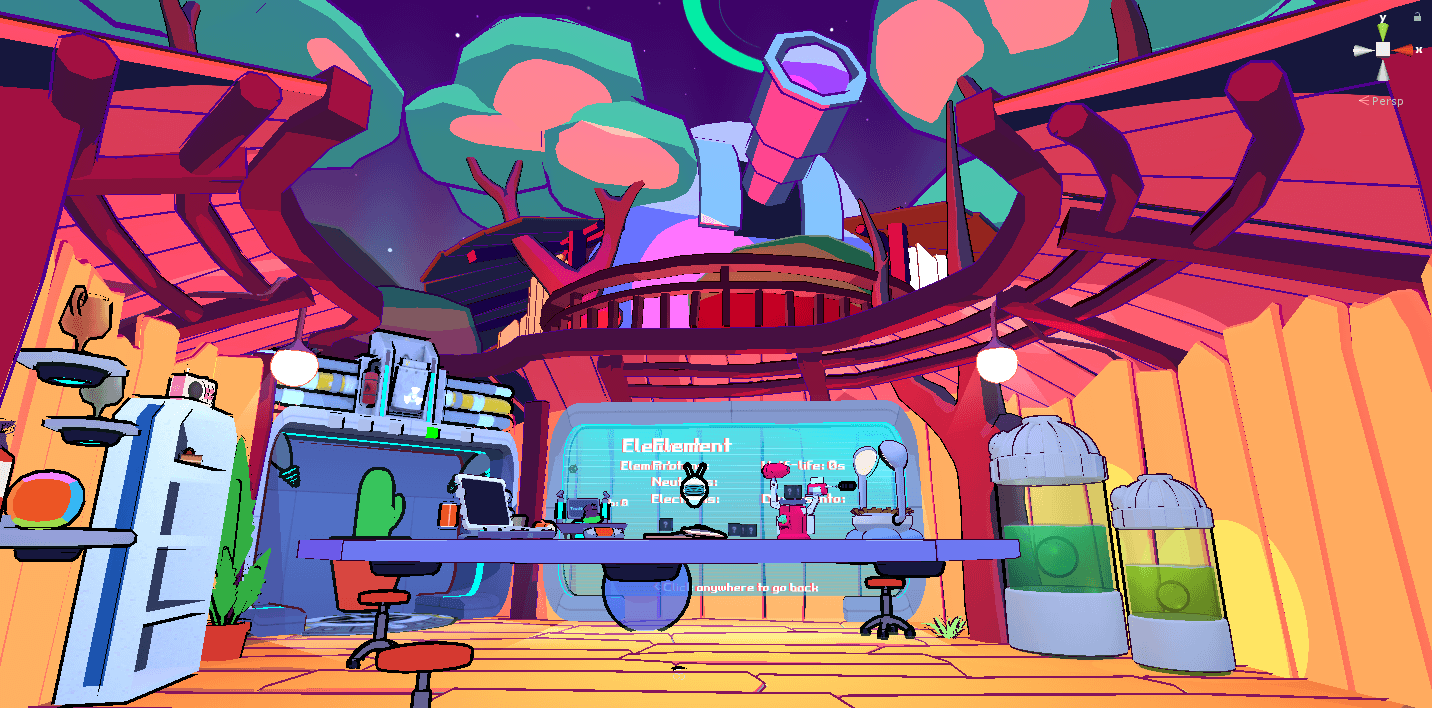

By the time I was done with my artstyle tests, John had completed a first pass of the 3D treehouse mesh, so I jumped into his Unity scene and started experimenting with real-time lighting, various toon shaders and colorful materials:

At the same time, I started prototyping the exterior of the treehouse with placeholder assets from the Unity asset store. The goal was to find out what the exterior should feel like. Should the treehouse be floating in space? Perhaps on an asteroid? Or should it be grounded and hidden in the middle of a forested valley?

Although it sounded kinda cool, the valley idea didn’t pan out. After spending some time in this version of the treehouse in VR, I felt like I was playing a hermit, hiding away from society in some deep forested area. Iron Man is more social than that. Plus, the outside of the treehouse can’t possibly be this static and dull. Ideally, the fun stuff happening in the treehouse should somehow overflow into the outside of the treehouse as well.

The problem, of course, was that I wanted to give the player a chance to explore and learn about the world that lives outside the treehouse, while at the same time remaining reluctant about the idea of adding VR movement in Tablecraft (e.g. teleportation), for reasons that I will explain in future devlogs. Luckily, in the end it all worked out, as it now seems like the answer may have been more straightforward than I thought. If you can’t teleport yourself all the way to the mountain, teleport the mountain all the way to you:

More details on that in the 2nd part of this devlog.

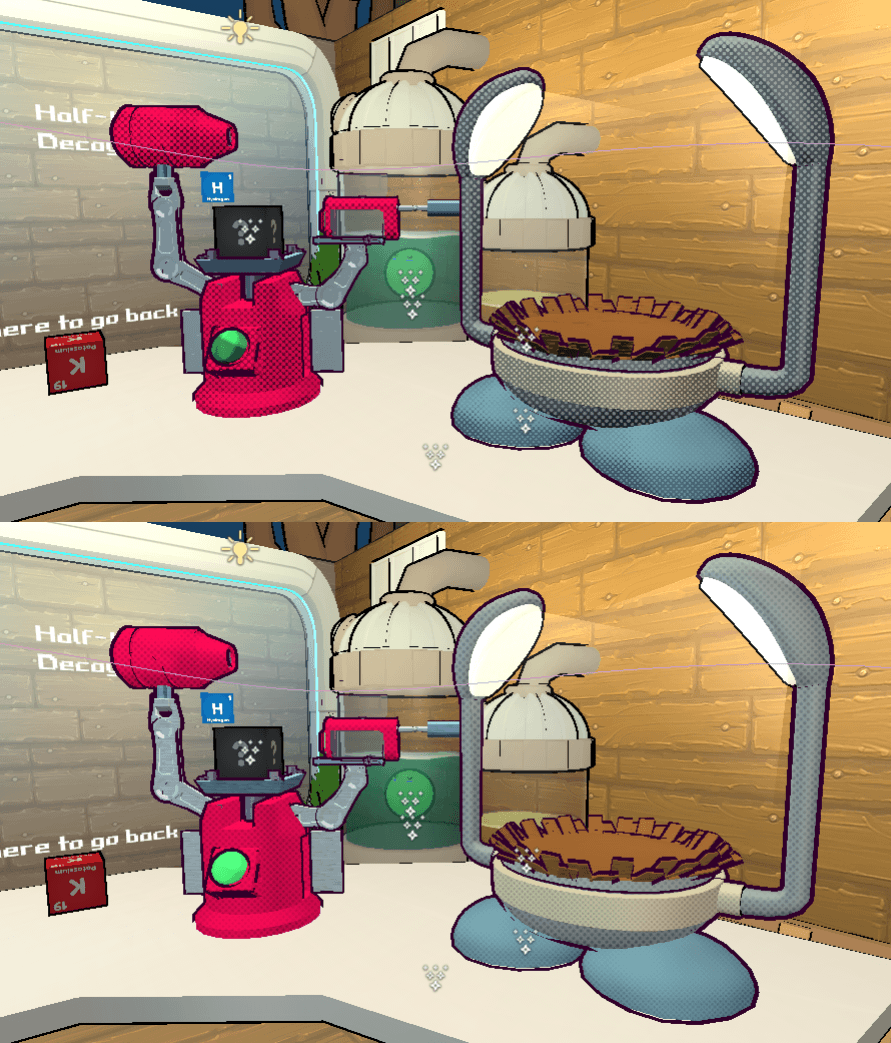

Anyway, at this point I also realized that something felt wrong about the treehouse structure itself. It felt too small to be an Iron Man kind of lab. So I duplicated the entire treehouse and added a new floor. There, I added an ugly giant telescope prototype, which I later replaced with a much better one that John 3D modeled:

After that, when I stepped into the treehouse in VR, things no longer felt as out of place as they did before. The treehouse was now starting to feel more like a solarpunk facility with multiple floors, similar to Iron Man’s cliff-side mansion-lab, as opposed to a tiny shack on a tree. Perhaps Tablecraft’s fiction implies that the chemistry/physics work is done in the balcony area, whereas the astronomy work is done on the upper telescope floor. Likewise, perhaps there are other floors where other kinds of work are done.

This is where we stopped. It’s still a long way from being finished, but at least the core idea is there. Since then, John has been working on light-mapping the whole thing by hand, as he will explain in the devlog that kickstarted this prequel. Light-mapping by hand was a necessary evil for reasons that will be explained in his devlog.

As for the artstyle, we still need to inject more personality into it so that it doesn’t look as generic as it currently does. I definitely want to make sure we don’t fall into the Childish Danger Zone. Tablecraft is not meant to be childish. Playful, yes, but not childish. By developing a game that features educational content (which, as you might guess, could come off to some players as being potentially boring and condescending) and then making it all worse by having it look childish, we’d be shooting ourselves in the foot. There are certain aspects of the artstyle and audio that still feel very childish, so we’ll be tweaking those in the months to come.

Keep an eye out for Part 2.